When Should I Use Actor-Critic Methods Over Other Reinforcement Learning Algorithms?

Reinforcement learning (RL) is a type of machine learning that allows an agent to learn how to behave in an environment by interacting with it and receiving rewards or punishments. RL algorithms are used in a wide variety of applications, such as robotics, game playing, and financial trading.

Advantages Of Actor-Critic Methods

Actor-critic methods are a class of RL algorithms that combine the advantages of policy gradient methods and value-based methods. Policy gradient methods directly optimize the policy, which is the mapping from states to actions. Value-based methods learn a value function, which estimates the expected return for taking a particular action in a given state.

By combining both approaches, actor-critic methods can achieve the best of both worlds. They can learn policies that are both effective and efficient.

Here are some of the benefits of using actor-critic methods:

- Policy Gradient Methods: Policy gradient methods are a direct way to optimize the policy. This can be done by using a gradient-based optimization algorithm, such as gradient ascent or gradient descent. Policy gradient methods are often used in continuous action spaces, where the agent can take any action within a certain range.

- Value-Based Methods: Value-based methods learn a value function, which estimates the expected return for taking a particular action in a given state. This information can be used to select actions that are likely to lead to high rewards. Value-based methods are often used in discrete action spaces, where the agent can only take a finite number of actions.

- Benefits of Combining Both Approaches: Actor-critic methods combine the advantages of both policy gradient methods and value-based methods. They can learn policies that are both effective and efficient. Actor-critic methods are often used in continuous action spaces, where the agent can take any action within a certain range.

When To Use Actor-Critic Methods

Actor-critic methods are a good choice for RL problems that have the following characteristics:

- Continuous Action Spaces: Actor-critic methods are well-suited for problems with continuous action spaces. This is because policy gradient methods can be used to directly optimize the policy in continuous action spaces.

- Non-Stationary Environments: Actor-critic methods are also a good choice for problems with non-stationary environments. This is because actor-critic methods can learn to adapt to changes in the environment over time.

- High-Dimensional State Spaces: Actor-critic methods can also be used to solve problems with high-dimensional state spaces. This is because actor-critic methods can learn to generalize across different states.

- Sparse Rewards: Actor-critic methods can also be used to solve problems with sparse rewards. This is because actor-critic methods can learn to explore the environment and find the actions that lead to high rewards.

Drawbacks Of Actor-Critic Methods

Actor-critic methods also have some drawbacks, including:

- Variance in Policy Updates: Actor-critic methods can suffer from variance in policy updates. This is because the policy is updated based on the gradient of the value function, which can be noisy.

- Sensitivity to Hyperparameters: Actor-critic methods can also be sensitive to hyperparameters. This means that the performance of the algorithm can be affected by the choice of hyperparameters, such as the learning rate and the discount factor.

- Off-Policy Training Difficulties: Actor-critic methods can also be difficult to train off-policy. This means that the algorithm may not be able to learn from data that was collected using a different policy.

Comparison With Other Reinforcement Learning Algorithms

Actor-critic methods are often compared to other RL algorithms, such as Q-learning, SARSA, Deep Q-Network (DQN), Policy Gradients, and Asynchronous Advantage Actor-Critic (A3C).

Here is a brief overview of these algorithms:

- Q-Learning: Q-learning is a value-based RL algorithm that learns a value function for each state-action pair. The algorithm then uses the value function to select actions that are likely to lead to high rewards.

- SARSA: SARSA is a value-based RL algorithm that is similar to Q-learning. However, SARSA uses a different update rule for the value function. This update rule makes SARSA more efficient than Q-learning in some cases.

- Deep Q-Network (DQN): DQN is a deep learning-based RL algorithm that uses a neural network to approximate the value function. DQN has been shown to achieve state-of-the-art results on a variety of RL problems.

- Policy Gradients: Policy gradients are a direct way to optimize the policy. This can be done by using a gradient-based optimization algorithm, such as gradient ascent or gradient descent. Policy gradient methods are often used in continuous action spaces.

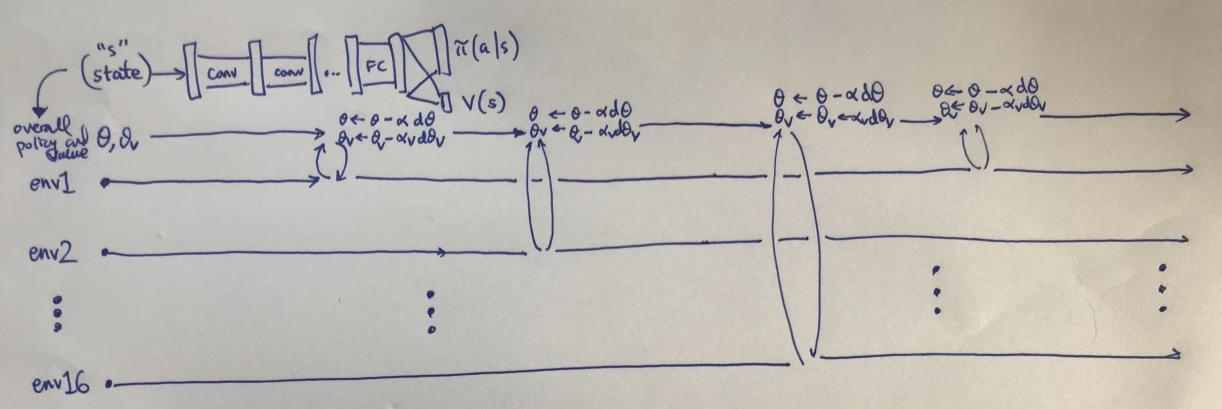

- Asynchronous Advantage Actor-Critic (A3C): A3C is an actor-critic method that uses asynchronous training. This means that the algorithm can learn from multiple experiences simultaneously. A3C has been shown to achieve state-of-the-art results on a variety of RL problems.

Summary Of Key Points

In this article, we have discussed the advantages and disadvantages of actor-critic methods. We have also compared actor-critic methods to other RL algorithms.

Here are some key points to remember:

- Actor-critic methods combine the advantages of policy gradient methods and value-based methods.

- Actor-critic methods are a good choice for problems with continuous action spaces, non-stationary environments, high-dimensional state spaces, and sparse rewards.

- Actor-critic methods can suffer from variance in policy updates, sensitivity to hyperparameters, and off-policy training difficulties.

- Actor-critic methods have been shown to achieve state-of-the-art results on a variety of RL problems.

Recommendations For Choosing An Algorithm

The choice of RL algorithm depends on the specific problem that you are trying to solve. However, here are some general recommendations:

- If you have a problem with a continuous action space, then you should consider using an actor-critic method or a policy gradient method.

- If you have a problem with a non-stationary environment, then you should consider using an actor-critic method or a SARSA method.

- If you have a problem with a high-dimensional state space, then you should consider using an actor-critic method or a DQN method.

- If you have a problem with sparse rewards, then you should consider using an actor-critic method or a Q-learning method.

Future Directions In Actor-Critic Methods

Actor-critic methods are a promising area of research in RL. There are a number of active research areas in actor-critic methods, including:

- Developing new actor-critic algorithms that are more efficient and robust.

- Applying actor-critic methods to new domains, such as robotics and finance.

- Developing theoretical foundations for actor-critic methods.

Actor-critic methods are a powerful tool for solving a wide variety of RL problems. As research in this area continues, we can expect to see actor-critic methods being used to solve even more complex and challenging problems.

YesNo

Leave a Reply