How Can Reinforcement Learning Be Used to Create Safer and More Reliable Robots?

As robots become increasingly integrated into everyday life, ensuring their safety and reliability is of paramount importance. Reinforcement learning (RL) is a machine learning paradigm in which an agent learns a policy through trial-and-error interaction with its environment, guided by a reward signal. It has emerged as a promising approach to developing robots that can operate safely and reliably in complex, dynamic environments.

Reinforcement Learning For Robot Safety

Overview Of RL Safety Methods:

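- Risk-Aware RL: This approach builds risk assessment into the RL objective. For example, it may penalize the variance of the return or optimize a tail-risk measure such as conditional value at risk (CVaR) instead of only the expected return. Robots then learn policies that reduce the likelihood of rare but severe outcomes such as accidents or injuries.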

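- Constrained RL: This technique defines safety constraints that the learned policy must satisfy, typically as limits on the expected cumulative value of a cost signal (a constrained Markov decision process). The algorithm maximizes reward subject to those limits. The constraints can be based on physical limitations, ethical considerations, or regulatory requirements.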

- Inverse RL: This method infers a reward function from demonstrations of the desired safe behavior, for example trajectories recorded from a skilled human operator. An RL algorithm then learns a policy that maximizes the recovered reward, so safe conduct is learned from examples rather than encoded in a hand-designed reward.
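To make the Lagrangian view of constrained RL concrete, here is a minimal sketch on a hypothetical one-step task rather than a full robot MDP. The three actions, their rewards and safety costs, the temperature, and the function names (`policy_for`, `solve_constrained`) are illustrative assumptions. For a fixed multiplier λ, the policy is the softmax (entropy-regularized) best response to the penalized reward r − λ·c. Because the expected cost can only decrease as λ grows, bisection finds the smallest penalty that keeps the expected cost within the budget. In a real system, the expectations would be estimated from rollouts, and the inner step would be a policy-optimization update.

```python
import math

# Hypothetical one-step task (illustrative numbers only): three motion
# options for a robot arm, each with a task reward and a safety cost.
ACTIONS = ["fast", "moderate", "cautious"]
REWARDS = [1.0, 0.7, 0.3]
COSTS = [0.9, 0.4, 0.1]


def policy_for(lam, temperature=0.1):
    """Entropy-regularized best response to the Lagrangian reward r - lam * c.

    Returns a softmax distribution with logits (r - lam * c) / temperature.
    """
    logits = [(r - lam * c) / temperature for r, c in zip(REWARDS, COSTS)]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(z - m) for z in logits]
    total = sum(weights)
    return [w / total for w in weights]


def expected(probs, values):
    """Expectation of per-action values under the given action probabilities."""
    return sum(p * v for p, v in zip(probs, values))


def solve_constrained(cost_budget, lam_hi=10.0, iters=60):
    """Find the smallest multiplier lam >= 0 whose policy satisfies E[cost] <= budget.

    Expected cost is non-increasing in lam, so bisection on lam is valid.
    """
    if expected(policy_for(0.0), COSTS) <= cost_budget:
        return 0.0, policy_for(0.0)  # constraint inactive: no penalty needed
    if expected(policy_for(lam_hi), COSTS) > cost_budget:
        raise ValueError("budget infeasible or lam_hi too small")
    lam_lo = 0.0
    for _ in range(iters):
        mid = 0.5 * (lam_lo + lam_hi)
        if expected(policy_for(mid), COSTS) > cost_budget:
            lam_lo = mid  # still too risky: penalize cost more
        else:
            lam_hi = mid  # feasible: try a smaller penalty
    return lam_hi, policy_for(lam_hi)  # lam_hi always stays on the feasible side


if __name__ == "__main__":
    lam, probs = solve_constrained(cost_budget=0.5)
    print(f"lambda = {lam:.3f}")
    for name, p in zip(ACTIONS, probs):
        print(f"{name:>9}: {p:.3f}")
    print("expected reward:", round(expected(probs, REWARDS), 3))
    print("expected cost:  ", round(expected(probs, COSTS), 3))
```

If the budget is raised to at least the unconstrained policy's expected cost, λ drops back to zero. In this sense, the multiplier measures how strongly the safety constraint binds.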

Benefits Of RL For Safety:

- Learning from Experience: RL algorithms learn from their interactions with the environment, allowing robots to adapt their behavior to remain safe across a variety of situations.

- Generalization to New Environments: Policies trained on sufficiently varied experience (for example, many randomized simulated environments) can generalize to environments they have not seen, making them suitable for deployment in diverse scenarios.

- Fault Tolerance: RL algorithms can be trained to handle unexpected events and recover from failures, enhancing the overall safety of robots.

Reinforcement Learning For Robot Reliability

Overview Of RL Reliability Methods:

- Fault-Tolerant RL: This approach involves training RL algorithms to be robust to faults and failures. The algorithm learns policies that can maintain safe and reliable operation even in the presence of component malfunctions or environmental disturbances.

- Lifelong RL: This technique enables RL algorithms to continuously learn and adapt throughout their lifetime. As the robot gains more experience, it can refine its policies to improve reliability and performance.

- Multi-Task RL: This approach trains a single policy, or a shared representation, on several related tasks at once. Because experience is shared across tasks, the algorithm can develop more general and reliable policies than it would from any single task.
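As a rough illustration of fault-tolerant RL, the sketch below trains tabular Q-learning in a simulator that randomly injects actuator faults. As a result, the learned values already account for stalls and wrong-direction moves. The corridor environment, the fault probabilities `P_STUCK` and `P_REVERSED`, and the hyperparameters are hypothetical choices for illustration, not values from any particular system. A real robot would use a physics simulator and a function approximator, but the fault-injection pattern is the same.

```python
import random

# Hypothetical 1-D corridor: the robot starts in cell 0 and must reach GOAL.
N_CELLS = 6
GOAL = N_CELLS - 1
ACTIONS = (-1, +1)   # move left, move right
P_STUCK = 0.1        # assumed probability the actuator stalls (no movement)
P_REVERSED = 0.1     # assumed probability a fault moves the robot the wrong way


def step(state, action, rng):
    """Simulated transition with randomly injected actuator faults."""
    u = rng.random()
    if u < P_STUCK:
        move = 0                  # fault: actuator stalls
    elif u < P_STUCK + P_REVERSED:
        move = -action            # fault: wrong direction
    else:
        move = action             # nominal behaviour
    next_state = min(max(state + move, 0), GOAL)  # walls at both ends
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def greedy(q, state, rng):
    """Greedy action index with random tie-breaking."""
    best = max(q[state])
    return rng.choice([a for a, v in enumerate(q[state]) if v == best])


def train(episodes=2000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Epsilon-greedy tabular Q-learning in a simulator with fault injection."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy(q, state, rng)
            next_state, reward, done = step(state, ACTIONS[a], rng)
            # Q-learning target; no bootstrap from the terminal goal cell.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    q = train()
    policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
    print(policy)
```

Because faults are sampled during training, the Q-values estimate returns under faulty actuation. In this toy corridor, the best action does not change. In environments with hazards, however, the optimal policy under such a fault model can favor routes that keep a safety margin, because a slip near a hazard would be costly.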

Benefits Of RL For Reliability:

- Improved Performance over Time: RL algorithms can continuously learn and improve their performance over time, leading to increased reliability.

- Reduced Maintenance Costs: By learning to operate efficiently and reliably, RL algorithms can help reduce the need for frequent maintenance and repairs.

- Increased Uptime: RL algorithms can help robots operate reliably for longer periods, minimizing downtime and maximizing productivity.

Challenges And Limitations Of RL In Robotics

- Data Requirements: RL algorithms typically require large amounts of data to learn effective policies. This can be challenging to obtain in real-world robotics applications.

- Computational Complexity: RL algorithms can be computationally expensive, especially for complex tasks or large-scale environments.

- Transferability of Learned Policies: Learned policies may not generalize well to different environments or scenarios, requiring additional training or adaptation.

Case Studies And Applications

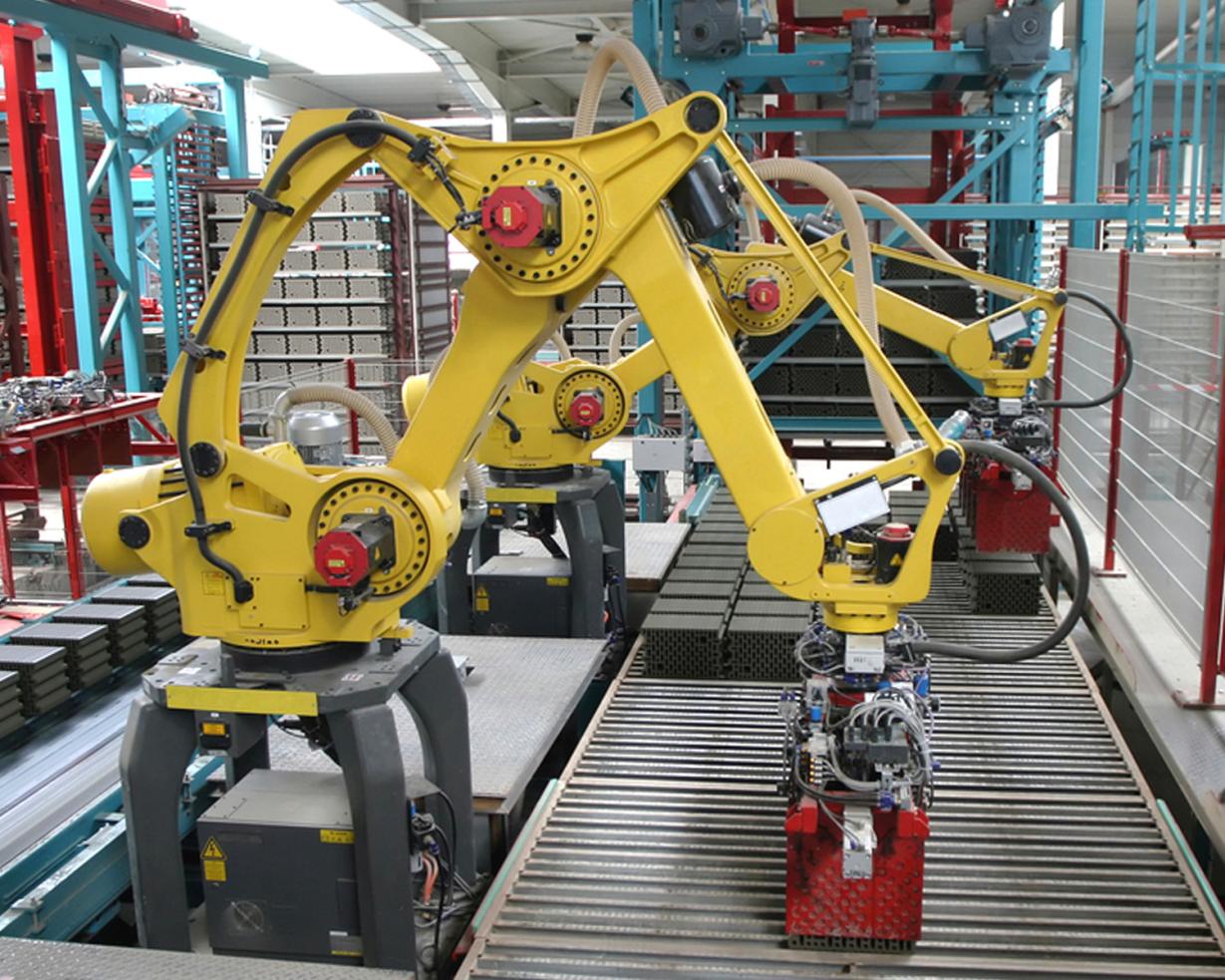

- Industrial Automation: RL has been used to train robots to perform complex tasks in industrial settings, such as assembly and welding, with improved safety and reliability.

- Healthcare: RL has been investigated for surgical robotics, with the goal of performing delicate procedures with high precision and minimal invasiveness.

- Autonomous Vehicles: RL has been used, particularly in simulation and research settings, to train driving policies that navigate complex traffic scenarios safely and efficiently.

Reinforcement learning holds considerable promise for creating safer and more reliable robots. RL algorithms can learn from experience and adapt to changing environments. By leveraging these abilities, we can develop robots that operate autonomously in a wide range of applications, from industrial automation to healthcare and autonomous vehicles. However, further research is needed to address the challenges outlined above: data requirements, computational complexity, and the transferability of learned policies. As these challenges are overcome, RL is likely to play a central role in robotics and help pave the way for robots that can safely and reliably coexist with humans.
